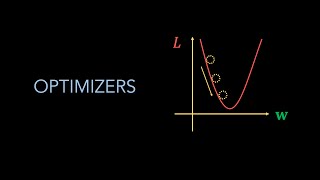

Nesterov Accelerated Gradient (NAG) Explained in Detail | Animations | Optimizers in Deep Learning

The acceleration that momentum builds up can carry the parameters past the minimum at the bottom of a basin or valley. Nesterov momentum is an extension of momentum that computes the decaying moving average of gradients evaluated at projected (look-ahead) positions in the search space, rather than at the current positions themselves.
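Written out, the two update rules differ only in where the gradient is taken. The notation here is an assumption, not necessarily the one used in the video: parameters $w$, velocity $v$, momentum coefficient $\gamma$, learning rate $\eta$, loss $L$.

```latex
% Classical momentum: gradient evaluated at the current position w_t
v_t = \gamma v_{t-1} + \eta \nabla L(w_t), \qquad w_{t+1} = w_t - v_t

% Nesterov (NAG): gradient evaluated at the projected position w_t - \gamma v_{t-1}
v_t = \gamma v_{t-1} + \eta \nabla L(w_t - \gamma v_{t-1}), \qquad w_{t+1} = w_t - v_t
```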

This harnesses the accelerating benefit of momentum whilst letting the search slow down as it approaches the optimum, reducing the likelihood of missing or overshooting it.
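A small experiment makes the overshoot claim concrete. This is a sketch under assumed settings (a 1-D quadratic $f(w) = w^2/2$ with its minimum at 0, learning rate 0.1, momentum 0.9, starting at $w = 1$); the function `run` is hypothetical and not taken from the video. Both methods swing past the minimum, but the look-ahead gradient starts braking earlier, so NAG travels less far into $w < 0$.

```python
def run(lookahead: bool, w0: float = 1.0, lr: float = 0.1,
        gamma: float = 0.9, steps: int = 300) -> list[float]:
    """Return the trajectory of w for momentum (lookahead=False) or NAG (lookahead=True)."""
    def grad(w: float) -> float:
        return w  # gradient of f(w) = w**2 / 2, whose minimum is at w = 0

    w, v = w0, 0.0
    trajectory = [w]
    for _ in range(steps):
        # Momentum uses the gradient at w; NAG uses it at the projected point w - gamma * v
        point = w - gamma * v if lookahead else w
        v = gamma * v + lr * grad(point)  # decaying moving average of gradients
        w = w - v
        trajectory.append(w)
    return trajectory


momentum_path = run(lookahead=False)
nag_path = run(lookahead=True)

# Overshoot = how far the iterate travels past the minimum at 0 (into w < 0)
print(f"momentum overshoot: {-min(momentum_path):.3f}")  # 0.604
print(f"NAG overshoot:      {-min(nag_path):.3f}")       # 0.355
```

Setting `lookahead=False` gives plain momentum, so the only difference between the two runs is the point at which the gradient is evaluated.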

Digital Notes for Deep Learning: https://shorturl.at/NGtXg

============================

Do you want to learn from me?

Check out my affordable mentorship program at: https://learnwith.campusx.in

============================

📱 Grow with us:

CampusX's LinkedIn: https://www.linkedin.com/company/campusx-official

CampusX on Instagram for daily tips: https://www.instagram.com/campusx.official

My LinkedIn: https://www.linkedin.com/in/nitish-singh-03412789

Discord: https://discord.gg/PsWu8R87Z8

⌚Time Stamps⌚

00:00 - Intro

00:47 - What is NAG?

09:12 - Mathematical Intuition of NAG

12:52 - Momentum

17:59 - Geometric Intuition of NAG

24:39 - Disadvantages - (1)

25:55 - Keras Code Implementation

27:30 - Outro
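On the Keras side, NAG is switched on through the `nesterov=True` argument of the SGD optimizer, e.g. `tf.keras.optimizers.SGD(learning_rate=0.01, momentum=0.9, nesterov=True)`; the video's own code is not reproduced here. The Keras SGD documentation describes the Nesterov update as `velocity = momentum * velocity - learning_rate * g` followed by `w = w + momentum * velocity - learning_rate * g`, with `g` taken at the stored variable rather than at an explicit look-ahead point. The dependency-free sketch below (function names are hypothetical, the test function is an assumption) mimics that rearranged rule and checks numerically that it tracks the projected points of the classic look-ahead formulation.

```python
from typing import Callable

Grad = Callable[[float], float]


def keras_style_nesterov(grad: Grad, w0: float, lr: float = 0.1,
                         momentum: float = 0.9, steps: int = 200) -> list[float]:
    """Rearranged NAG: gradient taken at the stored variable itself (Keras-like form)."""
    w, m = w0, 0.0
    history = [w]
    for _ in range(steps):
        g = grad(w)
        m = momentum * m - lr * g       # velocity, sign convention m = -v
        w = w + momentum * m - lr * g   # step that already folds in the look-ahead
        history.append(w)
    return history


def lookahead_nesterov(grad: Grad, w0: float, lr: float = 0.1,
                       momentum: float = 0.9, steps: int = 200) -> list[float]:
    """Classic NAG; records the projected point w_t - momentum * v_{t-1} at each step."""
    w, v = w0, 0.0
    projected = [w - momentum * v]
    for _ in range(steps):
        v = momentum * v + lr * grad(w - momentum * v)  # gradient at the projected point
        w = w - v
        projected.append(w - momentum * v)
    return projected


def grad(w: float) -> float:
    return 2.0 * (w - 3.0)  # gradient of f(w) = (w - 3)**2, minimum at w = 3


a = keras_style_nesterov(grad, w0=0.0)
b = lookahead_nesterov(grad, w0=0.0)
print(max(abs(x - y) for x, y in zip(a, b)) < 1e-9)  # True
print(round(a[-1], 6))  # 3.0
```

The rearranged form is convenient for a library because it needs only one gradient evaluation per step, at the variable it already holds; the stored variable simply plays the role of the look-ahead point.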