Variational Autoencoders - EXPLAINED!

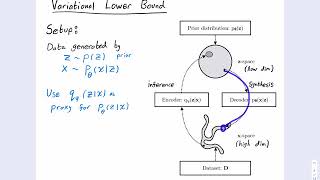

In this video, we are going to talk about Generative Modeling with Variational Autoencoders (VAEs). The explanation should be easy to follow without a math (or even much tech) background. However, I also introduce more technical concepts for you nerds out there and compare VAEs with Generative Adversarial Networks (GANs).

*Subscribe to CodeEmporium*: https://www.youtube.com/c/CodeEmporium/sub_confirmation=1

REFERENCES

[1] Math + Intuition behind VAE: http://ruishu.io/2018/03/14/vae/

[2] Detailed math in VAE: https://wiseodd.github.io/techblog/2016/12/10/variational-autoencoder/

[3] VAEs simply explained: http://kvfrans.com/variational-autoencoders-explained/

[4] Python code for a VAE: https://ml-cheatsheet.readthedocs.io/en/latest/architectures.html#vae

[5] Under the hood of VAE: https://blog.fastforwardlabs.com/2016/08/22/under-the-hood-of-the-variational-autoencoder-in.html

[6] Teaching VAE to generate MNIST: https://towardsdatascience.com/teaching-a-variational-autoencoder-vae-to-draw-mnist-characters-978675c95776

[7] Conditional VAE: https://wiseodd.github.io/techblog/2016/12/17/conditional-vae/

[8] Estimating user location in social media with stacked denoising autoencoders (Liu and Inkpen, 2015): http://www.aclweb.org/anthology/W15-1527

Background vector for thumbnail created by vilmosvarga: https://www.freepik.com/free-photos-vectors/background