Multi-Head Attention in Transformer Neural Networks with Code!

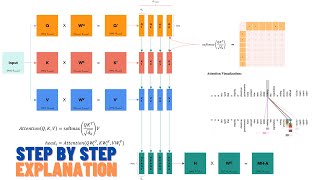

Let's talk about multi-head attention in transformer neural networks.

Let's understand the intuition, math, and code of self-attention in transformer neural networks.
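For reference, these are the multi-head attention formulas as defined in the Transformer paper [2]. Each of the $h$ heads runs scaled dot-product attention on its own learned projections of the queries, keys, and values, and the head outputs are concatenated and projected by $W^O$:

$$
\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{QK^\top}{\sqrt{d_k}}\right) V
$$

$$
\mathrm{head}_i = \mathrm{Attention}\!\left(Q W_i^Q,\; K W_i^K,\; V W_i^V\right), \qquad i = 1, \dots, h
$$

$$
\mathrm{MultiHead}(Q, K, V) = \mathrm{Concat}(\mathrm{head}_1, \dots, \mathrm{head}_h)\, W^O
$$

In the paper, $d_k = d_v = d_{\text{model}} / h$, with $d_{\text{model}} = 512$ and $h = 8$, so each head works in a 64-dimensional subspace. In self-attention, $Q$, $K$, and $V$ all come from the same input sequence.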

ABOUT ME

⭕ Subscribe: https://www.youtube.com/c/CodeEmporium?sub_confirmation=1

📚 Medium Blog: https://medium.com/@dataemporium

💻 Github: https://github.com/ajhalthor

👔 LinkedIn: https://www.linkedin.com/in/ajay-halthor-477974bb/

RESOURCES

[1 🔎] Code for video: https://github.com/ajhalthor/Transformer-Neural-Network/blob/main/Mutlihead_Attention.ipynb

[2 🔎] Transformer Main Paper: https://arxiv.org/abs/1706.03762

[3 🔎] Bidirectional RNN Paper: https://deeplearning.cs.cmu.edu/F20/document/readings/Bidirectional%20Recurrent%20Neural%20Networks.pdf

PLAYLISTS FROM MY CHANNEL

⭕ ChatGPT Playlist of all other videos: https://youtube.com/playlist?list=PLTl9hO2Oobd9coYT6XsTraTBo4pL1j4HJ

⭕ Transformer Neural Networks: https://youtube.com/playlist?list=PLTl9hO2Oobd_bzXUpzKMKA3liq2kj6LfE

⭕ Convolutional Neural Networks: https://youtube.com/playlist?list=PLTl9hO2Oobd9U0XHz62Lw6EgIMkQpfz74

⭕ The Math You Should Know: https://youtube.com/playlist?list=PLTl9hO2Oobd-_5sGLnbgE8Poer1Xjzz4h

⭕ Probability Theory for Machine Learning: https://youtube.com/playlist?list=PLTl9hO2Oobd9bPcq0fj91Jgk_-h1H_W3V

⭕ Coding Machine Learning: https://youtube.com/playlist?list=PLTl9hO2Oobd82vcsOnvCNzxrZOlrz3RiD

MATH COURSES (7-day free trial)

📕 Mathematics for Machine Learning: https://imp.i384100.net/MathML

📕 Calculus: https://imp.i384100.net/Calculus

📕 Statistics for Data Science: https://imp.i384100.net/AdvancedStatistics

📕 Bayesian Statistics: https://imp.i384100.net/BayesianStatistics

📕 Linear Algebra: https://imp.i384100.net/LinearAlgebra

📕 Probability: https://imp.i384100.net/Probability

OTHER RELATED COURSES (7-day free trial)

📕 ⭐ Deep Learning Specialization: https://imp.i384100.net/Deep-Learning

📕 Python for Everybody: https://imp.i384100.net/python

📕 MLOps Course: https://imp.i384100.net/MLOps

📕 Natural Language Processing (NLP): https://imp.i384100.net/NLP

📕 Machine Learning in Production: https://imp.i384100.net/MLProduction

📕 Data Science Specialization: https://imp.i384100.net/DataScience

📕 Tensorflow: https://imp.i384100.net/Tensorflow

TIMESTAMPS

0:00 Introduction

0:33 Transformer Overview

2:32 Multi-head attention theory

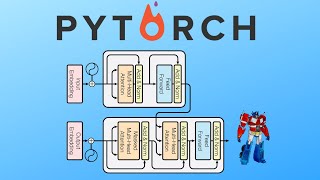

4:35 Code Breakdown

13:47 Final Coded Class
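The video's code breakdown builds up a multi-head attention class; the notebook in [1] has the author's own version. As an independent illustration (not the notebook's code), here is a minimal NumPy sketch of the same idea. Assumptions: NumPy rather than whatever framework the notebook uses, random untrained weights, a single fused projection `w_qkv` that produces Q, K, and V at once (equivalent to three separate matrices), and an additive mask where 0 keeps a position and `-inf` blocks it. The names `MultiHeadAttention`, `scaled_dot_product`, and `softmax` here are hypothetical.

```python
import numpy as np


def softmax(x, axis=-1):
    # Subtract the max along the axis for numerical stability before exponentiating.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def scaled_dot_product(q, k, v, mask=None):
    # q, k, v: (..., seq_len, head_dim)
    d_k = q.shape[-1]
    scores = q @ np.swapaxes(k, -1, -2) / np.sqrt(d_k)  # (..., seq_len, seq_len)
    if mask is not None:
        # Additive mask (assumed convention): 0 keeps a position, -inf blocks it.
        scores = scores + mask
    weights = softmax(scores, axis=-1)  # each row sums to 1
    return weights @ v, weights


class MultiHeadAttention:
    """Hypothetical NumPy sketch of multi-head self-attention with random weights."""

    def __init__(self, d_model, num_heads, seed=0):
        assert d_model % num_heads == 0, "d_model must be divisible by num_heads"
        self.d_model = d_model
        self.num_heads = num_heads
        self.head_dim = d_model // num_heads
        rng = np.random.default_rng(seed)
        scale = 1.0 / np.sqrt(d_model)
        # Fused projection for Q, K, V (columns [0:d), [d:2d), [2d:3d)) and output projection W^O.
        self.w_qkv = rng.normal(0.0, scale, size=(d_model, 3 * d_model))
        self.w_o = rng.normal(0.0, scale, size=(d_model, d_model))

    def _split_heads(self, t, batch, seq_len):
        # (batch, seq_len, d_model) -> (batch, num_heads, seq_len, head_dim)
        t = t.reshape(batch, seq_len, self.num_heads, self.head_dim)
        return t.transpose(0, 2, 1, 3)

    def __call__(self, x, mask=None):
        # x: (batch, seq_len, d_model); self-attention, so Q, K, V all come from x.
        batch, seq_len, _ = x.shape
        qkv = x @ self.w_qkv  # (batch, seq_len, 3 * d_model)
        q, k, v = np.split(qkv, 3, axis=-1)  # each (batch, seq_len, d_model)
        q = self._split_heads(q, batch, seq_len)
        k = self._split_heads(k, batch, seq_len)
        v = self._split_heads(v, batch, seq_len)
        values, attention = scaled_dot_product(q, k, v, mask)
        # Concatenate heads: (batch, num_heads, seq_len, head_dim) -> (batch, seq_len, d_model)
        values = values.transpose(0, 2, 1, 3).reshape(batch, seq_len, self.d_model)
        return values @ self.w_o, attention


if __name__ == "__main__":
    x = np.random.default_rng(1).normal(size=(2, 5, 512))
    mha = MultiHeadAttention(d_model=512, num_heads=8)
    out, attn = mha(x)
    print(out.shape, attn.shape)  # (2, 5, 512) (2, 8, 5, 5)
```

The per-head attention matrices are returned alongside the output so you can inspect what each head attends to. For decoder-style (causal) attention, a mask such as `np.triu(np.full((seq_len, seq_len), -np.inf), k=1)` blocks every position from attending to later ones.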